Key Word(s): Generators, Memory layouts

Generators

- Generators are useful in many places.

- For example, before `asyncio`, they were used to implement coroutines in Python.

- They can be used for big data-processing jobs.

Example

def mygen(N):
    for i in range(N):
        yield i**2

for vals in mygen(7):
    print(vals)

0 1 4 9 16 25 36

- A generator function looks like a normal function, but yields values instead of returning them.

- The syntax is the same otherwise (PEP 255 -- Simple Generators).

- A generator is different from a generator function: calling a generator function does not run its body; it creates and returns a generator object.

- The generator is an iterator: it gets built-in implementations of `__iter__` and `__next__`.

- When `next` is called on the generator, the function body runs until the first `yield`.

- The function body is then suspended, and the value given to `yield` is passed back to the calling scope as the result of `next`.

- When `next` is called again, it implicitly calls the generator's `__next__` again; the body resumes where it left off and runs until it reaches the next `yield`.

- This continues until execution reaches the end of the function body; returning from the function raises `StopIteration` in `next`.
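The protocol described above can be exercised by hand. This is a minimal sketch using a small hypothetical generator function, `squares`; it checks that a generator is its own iterator and that `next` raises `StopIteration` once the body finishes.

```python
def squares(n):
    """Hypothetical generator function: yields 0, 1, 4, ... up to (n - 1)**2."""
    for i in range(n):
        yield i * i

g = squares(3)          # calling it runs no body code yet; it just builds a generator
same = iter(g) is g     # a generator's __iter__ returns the generator itself
values = [next(g), next(g), next(g)]  # each next() runs the body up to the next yield

try:
    next(g)             # the loop is done and the body falls off the end...
    exhausted = False
except StopIteration:   # ...so next() raises StopIteration
    exhausted = True

print(same, values, exhausted)  # True [0, 1, 4] True
```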

Any Python function that has the yield keyword in its body is a generator function.

def gen123():
    print("A")
    yield 1
    print("B")
    yield 2
    print("C")
    yield 3

g = gen123()
print(gen123)
print(type(gen123))
print(type(g))

<function gen123 at 0x7fd4e6ce3dd0> <class 'function'> <class 'generator'>

print(g)

<generator object gen123 at 0x7fd4e6cc2b50>

print("A generator is an iterator.")

print("It has {} and {}".format(g.__iter__, g.__next__))

A generator is an iterator. It has <method-wrapper '__iter__' of generator object at 0x7fd4e6cc2b50> and <method-wrapper '__next__' of generator object at 0x7fd4e6cc2b50>

print(next(g))

A 1

print(next(g))

B 2

print(next(g))

C 3

print(next(g))

--------------------------------------------------------------------------- StopIteration Traceback (most recent call last) <ipython-input-8-1dfb29d6357e> in <module> ----> 1 print(next(g)) StopIteration:

More notes on generators

- Generators yield one item at a time.

- In this way, they feed the `for` loop one item at a time.

for i in gen123():
    print(i, "\n")

A 1 B 2 C 3
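A `for` loop drives a generator through exactly this `iter`/`next`/`StopIteration` protocol. The sketch below is a rough desugaring written as a hypothetical helper, `manual_for`, that collects items instead of running a loop body; the real loop is implemented in bytecode (`GET_ITER`/`FOR_ITER`), not in Python.

```python
def one_two_three():
    """Like gen123 above, minus the prints."""
    yield 1
    yield 2
    yield 3

def manual_for(iterable):
    """Roughly what `for item in iterable:` does, collecting each item into a list."""
    collected = []
    it = iter(iterable)         # for a generator, iter() returns the generator itself
    while True:
        try:
            item = next(it)     # resume the generator until its next yield
        except StopIteration:   # the generator finished: the loop ends quietly
            break
        collected.append(item)  # stands in for the loop body
    return collected

print(manual_for(one_two_three()))  # [1, 2, 3]
```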

How Things Work

We've introduced some data structures and discussed why they're important.

Now we'll go over where things live in the computer and how Python actually does things.

Process address space

What do we mean when we say a program is "in memory"?

- Compiled code must be loaded from disk into memory.

- Once your program starts, it must create (reserve) space for the variables it will use, and it must store and read values from those variables.

The code, the reserved space, and the generated data all constitute a program's memory footprint.

Every operating system has its own convention for exactly where and how these resources are laid out. The file-level side of that convention, which describes how code and data are packaged on disk so the loader can map them into memory, is called an object file format.

On Linux, the most common object file format is ELF, short for "Executable and Linkable Format".

A simplified view of a running program's memory focuses on two regions: the stack and the heap.

The Stack

The stack keeps track of function calls and their data.

- Whenever a function is called, a new chunk of memory is reserved at the top of the stack in order to store variables for that function.

- This includes variables that you have defined inside your function and function parameters, but it also includes data that were generated automatically by the compiler.

- The most recognizable value in this latter category is the return address. When a function executes `return`, the computer resumes executing instructions at the location the function was originally called from, and the return address is what records that location.

- When a function returns, it removes its stack frame from the stack. This means that at any given point, the stack contains a record of which functions the program is currently in.

- Removing the function's frame from the stack becomes a problem if you want to create space for variables and then access them after the function returns.
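CPython keeps an analogous record of the Python functions currently executing. As a small illustration (the helper names below are hypothetical), `inspect.stack()` walks that chain of active frames, innermost first, so a call made through `outer` and `middle` shows exactly which functions the program is currently in.

```python
import inspect

def call_chain():
    # inspect.stack() returns one FrameInfo per active frame, innermost first
    return [info.function for info in inspect.stack()]

def middle():
    return call_chain()

def outer():
    return middle()

chain = outer()
print(chain[:3])  # ['call_chain', 'middle', 'outer']
```

Once `outer` returns, those three frames are no longer part of the active chain.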

The Heap

- The heap is another region of memory, whose contents are not reclaimed when a function returns. It is explicitly managed: you need to ask to allocate space in it, and when you're finished with that space, you need to say so.

- This interface, explicitly requesting and releasing memory from the heap, is typically called memory management.

- In C you do this directly using `malloc`/`free`. Python (CPython specifically) takes care of this for you, using reference counting plus a garbage collector that cleans up reference cycles.
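In CPython, most objects are freed the moment their reference count drops to zero, and a separate cycle collector (`gc`) handles groups of objects that only reference each other. A small sketch, using a hypothetical `Buffer` class and `weakref.finalize` to record when each object is actually freed:

```python
import gc
import weakref

class Buffer:
    """Hypothetical stand-in for some heap-allocated resource."""

freed = []

# Case 1: a single reference. In CPython, dropping it frees the object immediately.
buf = Buffer()
weakref.finalize(buf, freed.append, "buf released")
del buf
print(freed)  # ['buf released']

# Case 2: a reference cycle. Each object keeps the other's refcount above zero,
# so only the cycle collector can reclaim them.
a, b = Buffer(), Buffer()
a.partner, b.partner = b, a
weakref.finalize(a, freed.append, "cycle released")
del a, b
gc.collect()  # force a collection instead of waiting for the automatic trigger
print(freed)  # ['buf released', 'cycle released']
```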

How Generators Work

Frames are allocated on the heap!

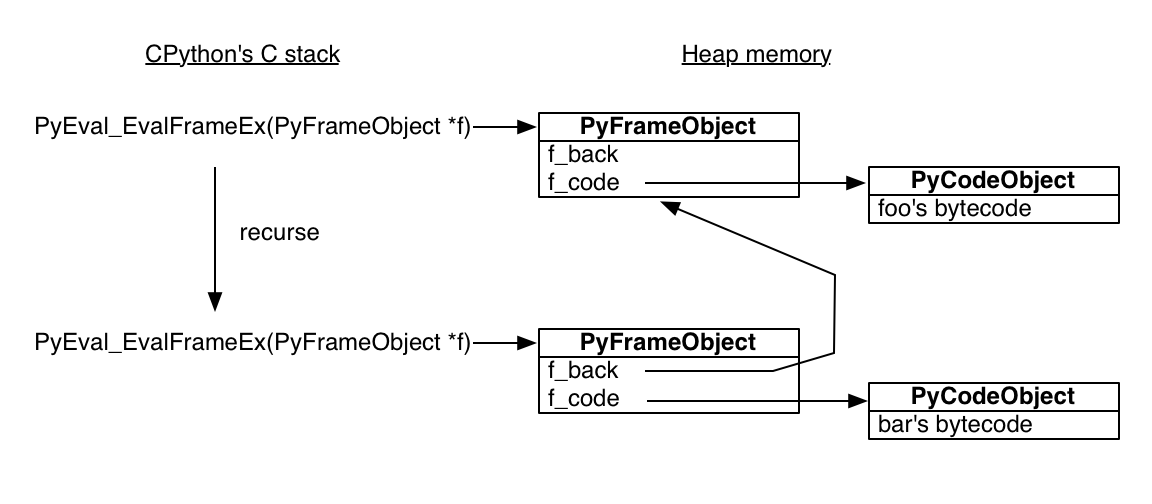

The standard Python interpreter is written in C. The C function that executes a Python function is called PyEval_EvalFrameEx. It takes a Python stack frame object and evaluates Python bytecode in the context of the frame.

But the Python stack frames it manipulates are on the heap. Among other surprises, this means a Python stack frame can outlive its function call. To see this interactively, save the current frame from within bar.

import inspect  # https://docs.python.org/3.6/library/inspect.html#types-and-members

def foo():
    bar()

def bar():
    global frame
    frame = inspect.currentframe()  # frame object of the function calling currentframe(), i.e. bar

foo()

frame.f_code.co_name # name with which this code object was defined

'bar'

caller_frame = frame.f_back

caller_frame.f_code.co_name

'foo'
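The same effect is easier to see when a function hands its own frame back to its caller. In this sketch (`make_frame` is a hypothetical name), the call has finished, yet the frame object, including its local variables, is still alive on the heap because we hold a reference to it.

```python
import inspect

def make_frame():
    secret = 42
    return inspect.currentframe()  # return this call's own frame object

f = make_frame()               # the call has returned...
name = f.f_code.co_name        # ...but its frame is still reachable
secret = f.f_locals["secret"]  # and so are its local variables
print(name, secret)  # make_frame 42
```

Holding on to frames like this also keeps all of their locals alive, which is one reason `frame.clear()` exists.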

Here's some code to help us understand how generators work.

# from https://bitbucket.org/yaniv_aknin/pynards/src/c4b61c7a1798766affb49bfba86e485012af6d16/common/blog.py?at=default&fileviewer=file-view-default

import dis
import types

def get_code_object(obj, compilation_mode="exec"):
    # Normalize code objects, frames, functions, and source strings to a code object
    if isinstance(obj, types.CodeType):
        return obj
    elif isinstance(obj, types.FrameType):
        return obj.f_code
    elif isinstance(obj, types.FunctionType):
        return obj.__code__
    elif isinstance(obj, str):
        try:
            return compile(obj, "<string>", compilation_mode)
        except SyntaxError as error:
            raise ValueError("syntax error in passed string") from error
    else:
        raise TypeError("get_code_object() can not handle '%s' objects" %
                        (type(obj).__name__,))

def diss(obj, mode="exec", recurse=False):
    # Disassemble obj's bytecode
    _visit(obj, dis.dis, mode, recurse)

def ssc(obj, mode="exec", recurse=False):
    # Summarize obj's code object (name, flags, constants, variable names)
    _visit(obj, dis.show_code, mode, recurse)

def _visit(obj, visitor, mode="exec", recurse=False):
    obj = get_code_object(obj, mode)
    visitor(obj)
    if recurse:
        # Nested functions and classes appear as code objects among the constants
        for constant in obj.co_consts:
            if type(constant) is type(obj):
                print()
                print('recursing into %r:' % (constant,))
                _visit(constant, visitor, mode, recurse)

Let's write and inspect a generator.

def gen():
    k = 1
    result = yield 1
    result2 = yield 2
    result3 = yield 3
    return 'done'

ssc(gen)

Name:              gen
Filename:          <ipython-input-15-512c5f5693a6>
Argument count:    0
Kw-only arguments: 0
Number of locals:  4
Stack size:        1
Flags:             OPTIMIZED, NEWLOCALS, GENERATOR, NOFREE
Constants:
   0: None
   1: 1
   2: 2
   3: 3
   4: 'done'
Variable names:
   0: k
   1: result
   2: result2
   3: result3

The GENERATOR flag tells Python that calling `gen` should create a generator object rather than run the function body.
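The flag is visible from Python as a bit in the code object's `co_flags`. A quick check with two hypothetical functions, `gen` and `plain`:

```python
import inspect

def gen():
    yield 1

def plain():
    return 1

# The compiler sets CO_GENERATOR whenever the function body contains `yield`
gen_flag = bool(gen.__code__.co_flags & inspect.CO_GENERATOR)
plain_flag = bool(plain.__code__.co_flags & inspect.CO_GENERATOR)
print(gen_flag, plain_flag)              # True False
print(inspect.isgeneratorfunction(gen))  # True
print(type(gen()).__name__)              # generator
```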

diss(gen)

  2           0 LOAD_CONST               1 (1)
              2 STORE_FAST               0 (k)

  3           4 LOAD_CONST               1 (1)
              6 YIELD_VALUE
              8 STORE_FAST               1 (result)

  4          10 LOAD_CONST               2 (2)
             12 YIELD_VALUE
             14 STORE_FAST               2 (result2)

  5          16 LOAD_CONST               3 (3)
             18 YIELD_VALUE
             20 STORE_FAST               3 (result3)

  6          22 LOAD_CONST               4 ('done')
             24 RETURN_VALUE

g = gen()

dir(g)

['__class__', '__del__', '__delattr__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__gt__', '__hash__', '__init__', '__init_subclass__', '__iter__', '__le__', '__lt__', '__name__', '__ne__', '__new__', '__next__', '__qualname__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__sizeof__', '__str__', '__subclasshook__', 'close', 'gi_code', 'gi_frame', 'gi_running', 'gi_yieldfrom', 'send', 'throw']

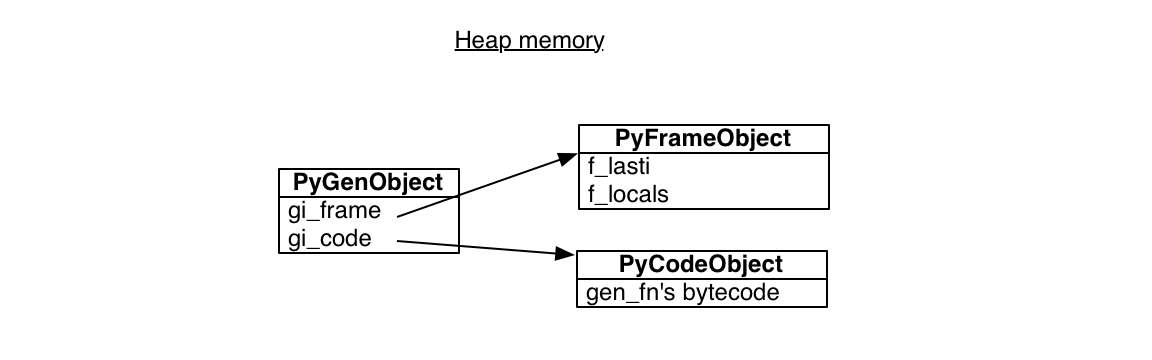

There is no g.__code__ like in functions, but there is a g.gi_code.

g.gi_code.co_name

'gen'

g.gi_frame

<frame at 0x7fd4e6da3210, file '<ipython-input-15-512c5f5693a6>', line 1, code gen>

diss(g.gi_frame.f_code)

  2           0 LOAD_CONST               1 (1)
              2 STORE_FAST               0 (k)

  3           4 LOAD_CONST               1 (1)
              6 YIELD_VALUE
              8 STORE_FAST               1 (result)

  4          10 LOAD_CONST               2 (2)
             12 YIELD_VALUE
             14 STORE_FAST               2 (result2)

  5          16 LOAD_CONST               3 (3)
             18 YIELD_VALUE
             20 STORE_FAST               3 (result3)

  6          22 LOAD_CONST               4 ('done')
             24 RETURN_VALUE

All generators created from the same generator function point to the same code object. But each generator has its own PyFrameObject, the same kind of frame a normal function call gets, except that this frame is NOT on any call stack: it sits in heap memory, waiting to be resumed.
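A quick way to confirm this split between shared code and per-generator frames, using a hypothetical two-line generator function:

```python
def gen():
    k = 1
    yield k

g1, g2 = gen(), gen()
shared_code = g1.gi_code is g2.gi_code is gen.__code__  # one code object for all
separate_frames = g1.gi_frame is not g2.gi_frame        # one frame per generator
print(shared_code, separate_frames)  # True True
```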

Look at `f_lasti`, the index of the last bytecode instruction executed in the frame. This attribute is not particular to generators; every frame has one. It is -1 here because the generator hasn't started yet.

g.gi_frame.f_lasti, g.gi_running

(-1, False)

next(g)

1

g.gi_frame.f_lasti, g.gi_running

(6, False)

Because the generator's frame lives on the heap and is not tied to a fixed place on the call stack, it can be resumed at any time and by any caller. It is not bound to the first-in, last-out (FILO) order of regular function execution.
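A small sketch of that freedom: two generators from the same hypothetical `counter` function are resumed in an interleaved order, partly from a different function (`resume`, also hypothetical), and each keeps its own local state in its own suspended frame.

```python
def counter(name):
    """Hypothetical generator: yields name1, name2, ... forever."""
    n = 0
    while True:
        n += 1
        yield f"{name}{n}"

def resume(gen):
    """A different caller that resumes whichever generator it is handed."""
    return next(gen)

a, b = counter("a"), counter("b")
# Each generator's suspended frame keeps its own n while the other one runs
out = [next(a), next(b), next(a), resume(a), resume(b)]
print(out)  # ['a1', 'b1', 'a2', 'a3', 'b2']
```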

g.send('a') # See PEP 342

2

g.gi_frame.f_locals

{'k': 1, 'result': 'a'}

g.send('b')

3

g.gi_frame.f_locals

{'k': 1, 'result': 'a', 'result2': 'b'}

g.send('c')

--------------------------------------------------------------------------- StopIteration Traceback (most recent call last) <ipython-input-30-544a40e6191d> in <module> ----> 1 g.send('c') StopIteration: done
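The `done` in that traceback is the generator's return value: it travels back on the `StopIteration` exception, in its `value` attribute. A sketch that captures it instead of letting the exception escape (the generator is a shortened, hypothetical variant of `gen`):

```python
def gen():
    result = yield 1
    result2 = yield 2
    return 'done'

g = gen()
first = next(g)        # runs to the first yield -> 1
second = g.send('a')   # result = 'a'; runs to the second yield -> 2
try:
    g.send('b')        # result2 = 'b'; the body then returns 'done'
except StopIteration as stop:
    returned = stop.value  # the return value rides along on StopIteration
print(first, second, returned)  # 1 2 done
```

This is also the mechanism `yield from` (PEP 380) uses to hand a sub-generator's return value to the outer generator.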

Quick Generators Recap

- A generator can pause at a `yield`.

- It can be resumed with a new value sent in (via `send`).

- It can return a value.

How does Python manage memory?

So far, when we create a list in Python (e.g. `a = [1, 2, 3, 4, 5]`), we have been thinking of it as an array of integers.

But that is not actually the case.

An array would be 5 contiguous integers with some book-keeping at the beginning either on the stack or the heap.

You are all highly encouraged to read the eminently readable article "Why Python is Slow" on Jake VanderPlas's blog. Some of the following points come from his discussion.

Python objects are allocated on the heap: an int is not an int

An int is represented in Python as a C structure allocated on the heap.

On Jake's blog, he shows how this integer is represented as a C structure:

struct _longobject {
    long ob_refcnt;          // in PyObject_HEAD
    PyTypeObject *ob_type;   // in PyObject_HEAD
    size_t ob_size;          // added by PyObject_VAR_HEAD
    long ob_digit[1];        // simplified: CPython typically stores 30-bit digits
};

It's not just a simple integer!

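import ctypes

# Mirrors the simplified struct above. Assumes a 64-bit Linux/macOS build of CPython
# (C long is 8 bytes) and a version before 3.12, which changed the int layout.
class IntStruct(ctypes.Structure):
    _fields_ = [("ob_refcnt", ctypes.c_long),
                ("ob_type", ctypes.c_void_p),
                ("ob_size", ctypes.c_ulong),
                ("ob_digit", ctypes.c_long)]

    def __repr__(self):
        return ("IntStruct(ob_digit={self.ob_digit}, "
                "refcount={self.ob_refcnt})").format(self=self)

num = 5
# In CPython, id() returns the object's memory address
IntStruct.from_address(id(num))  # 5 is a struct allocated on the heap and pointed to 200+ times!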

IntStruct(ob_digit=5, refcount=242)

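Why is the refcount so high? It turns out that CPython pre-allocates the small integers from -5 to 256 and shares a single object for each, so every 5 used anywhere in the interpreter points to the same struct!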
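The cache can be observed directly with `is`, which compares object identity. This relies on a CPython implementation detail (the small-int cache covering -5 through 256); other interpreters may behave differently, and real code should always compare numbers with `==`.

```python
x = 255
y = 256
a, b = 256, x + 1   # b is computed at runtime
c, d = 257, y + 1   # d is computed at runtime
print(a == b, a is b)  # True True: both names refer to the single cached 256 object
print(c == d, c is d)  # True False: 257 is outside the cache, so y + 1 built a new object
```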

Boxing and unboxing (or why Python is slow)

Because ints are stored this way, a simple addition involves a lot of work. It's not just an addition; there is all this machinery around it.

def test_add():
    a = 1
    b = 2
    c = a + b
    return c

import dis  # Disassembler for Python bytecode: https://docs.python.org/3/library/dis.html#dis.dis
dis.dis(test_add)  # Disassemble test_add's bytecode: https://docs.python.org/3/library/dis.html#dis.dis

  2           0 LOAD_CONST               1 (1)
              2 STORE_FAST               0 (a)

  3           4 LOAD_CONST               2 (2)
              6 STORE_FAST               1 (b)

  4           8 LOAD_FAST                0 (a)
             10 LOAD_FAST                1 (b)
             12 BINARY_ADD
             14 STORE_FAST               2 (c)

  5          16 LOAD_FAST                2 (c)
             18 RETURN_VALUE

Python Addition

- Assign 1 to `a`
    - 1a. Set `a->PyObject_HEAD->typecode` to integer
    - 1b. Set `a->val = 1`

- Assign 2 to `b`
    - 2a. Set `b->PyObject_HEAD->typecode` to integer
    - 2b. Set `b->val = 2`

- Call `binary_add(a, b)`
    - 3a. Find the typecode in `a->PyObject_HEAD`
    - 3b. `a` is an integer; its value is `a->val`
    - 3c. Find the typecode in `b->PyObject_HEAD`
    - 3d. `b` is an integer; its value is `b->val`
    - 3e. Call `binary_add<int, int>(a->val, b->val)`
    - 3f. The result of this is `result`, and it is an integer.

- Create a Python object `c`
    - 4a. Set `c->PyObject_HEAD->typecode` to integer
    - 4b. Set `c->val` to `result`

And that example was just to add two ints where binary_add is optimized in C!

If these were user-defined classes, there would be additional overhead from dunder methods for addition!
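To make that overhead concrete, here is a hypothetical user-defined `Meters` type. Every `+` on it dispatches to a Python-level `__add__` method, which in turn performs an int addition and allocates a new `Meters` object; the counter records how many times that dispatch happens.

```python
class Meters:
    """Hypothetical user-defined numeric type, used only for illustration."""
    add_calls = 0

    def __init__(self, value):
        self.value = value

    def __add__(self, other):
        Meters.add_calls += 1                    # count each dispatch to __add__
        return Meters(self.value + other.value)  # inner int add plus a new object

total = Meters(0)
for _ in range(1000):
    total = total + Meters(1)
print(total.value, Meters.add_calls)  # 1000 1000
```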

What About Lists?

A Python list is represented by the following struct:

typedef struct {
    long ob_refcnt;
    PyTypeObject *ob_type;
    Py_ssize_t ob_size;
    PyObject **ob_item;
    long allocated;
} PyListObject;

Notice the `PyObject **ob_item`. It points to the contents of the list, which is an array of `PyObject *` pointers.

For a list of ints, each of those pointers, when dereferenced, gives us an IntStruct. The `ob_size` field tells us the number of items in the list.

Thus this list is an array of pointers to heap-allocated IntStructs.
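Because the list stores pointers rather than the objects themselves, two slots can point at the very same heap object. A tiny sketch (the names are arbitrary):

```python
inner = [1, 2]
outer = [inner, inner]       # two slots holding the same pointer
outer[0].append(3)           # mutate through the first slot...
print(outer[1])              # [1, 2, 3]: ...and the change shows through the second
print(outer[0] is outer[1])  # True: one object, two references
```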

# Same assumption as IntStruct: a 64-bit Linux/macOS build of CPython
class ListStruct(ctypes.Structure):
    _fields_ = [("ob_refcnt", ctypes.c_long),
                ("ob_type", ctypes.c_void_p),
                ("ob_size", ctypes.c_ulong),
                ("ob_item", ctypes.c_long),  # PyObject** pointer cast to long
                ("allocated", ctypes.c_ulong)]

    def __repr__(self):
        return ("ListStruct(len={self.ob_size}, "
                "refcount={self.ob_refcnt})").format(self=self)

L = [1,2,3,4,5]

Lstruct = ListStruct.from_address(id(L))

Lstruct

ListStruct(len=5, refcount=1)

PtrArray = Lstruct.ob_size * ctypes.POINTER(IntStruct)

L_values = PtrArray.from_address(Lstruct.ob_item)

[ptr[0] for ptr in L_values] # ptr[0] dereferences the pointer

[IntStruct(ob_digit=1, refcount=2308), IntStruct(ob_digit=2, refcount=1071), IntStruct(ob_digit=3, refcount=517), IntStruct(ob_digit=4, refcount=472), IntStruct(ob_digit=5, refcount=241)]

What do others do?

Julia:

In the most general case, an array may contain objects of type Any. For most computational purposes, arrays should contain objects of a more specific type, such as Float64 or Int32.

a = Real[]  # typeof(a) = Array{Real,1}
if (f = rand()) < .8
    push!(a, f)  # f will always be Float64
end

Because a is an array of abstract type Real, it must be able to hold any Real value. Since Real objects can be of arbitrary size and structure, a must be represented as an array of pointers to individually allocated Real objects. We should instead use:

a = Float64[] # typeof(a) = Array{Float64,1}

which will create a contiguous block of 64-bit floating-point values.
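For comparison, Python's standard library offers a rough analogue of Julia's `Float64[]`: the `array` module stores raw C values in one contiguous buffer instead of pointers to boxed objects. A short sketch (the values are arbitrary):

```python
import array

values = [1.0, 2.0, 3.0, 4.0, 5.0]
boxed = list(values)               # a list: pointers to separate float objects
packed = array.array("d", values)  # one contiguous buffer of C doubles

print(packed.itemsize)                # 8: each element is a raw C double
print(packed.buffer_info()[1])        # 5: number of elements in the buffer
print(memoryview(packed).contiguous)  # True: the elements sit back to back
print(packed.tolist() == boxed)       # True: same values, different layout
```

Reading `packed[0]` creates (boxes) a new Python float on each access, so the savings come from storage and bulk operations, not from element-by-element Python loops.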

C:

You allocate explicitly either on the heap or stack.

In Python you can append to lists, yet `ob_item` points to a single block of memory. So why does our struct track both an `ob_size` and an `allocated` count?


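It turns out that Python lists are implemented as dynamic arrays: `ob_size` is the number of slots in use, `allocated` is the number of slots reserved in the `ob_item` block, and CPython over-allocates that block so that most appends don't need to reallocate.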
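The idea can be sketched in a few lines. `DynamicArray` below is a hypothetical teaching class, not CPython's implementation: it uses a fixed-length Python list as a stand-in for the raw pointer block, tracks a `size` (like `ob_size`) and a `capacity` (like `allocated`), and doubles the buffer when it fills up. CPython's real growth factor is smaller than 2, but any geometric growth keeps appends amortized O(1).

```python
import sys

class DynamicArray:
    """Hypothetical teaching sketch of a dynamic array (not CPython's list code)."""

    def __init__(self):
        self.size = 0                        # like ob_size: slots in use
        self.capacity = 1                    # like allocated: slots reserved
        self.slots = [None] * self.capacity  # fixed-size backing buffer

    def append(self, item):
        if self.size == self.capacity:       # buffer full: grow before writing
            self._grow(2 * self.capacity)
        self.slots[self.size] = item
        self.size += 1

    def _grow(self, new_capacity):
        new_slots = [None] * new_capacity
        for i in range(self.size):           # copy existing entries to the bigger buffer
            new_slots[i] = self.slots[i]
        self.slots = new_slots
        self.capacity = new_capacity

    def __getitem__(self, index):
        if not 0 <= index < self.size:
            raise IndexError("DynamicArray index out of range")
        return self.slots[index]

    def __len__(self):
        return self.size


arr = DynamicArray()
for x in range(5):
    arr.append(x * 10)
print(len(arr), arr.capacity, arr[4])  # 5 8 40

# CPython lists over-allocate too: the reported size only jumps every few appends
lst, sizes = [], []
for i in range(20):
    lst.append(i)
    sizes.append(sys.getsizeof(lst))
print(sizes)  # exact numbers vary by CPython version and platform
```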